Executive summary:

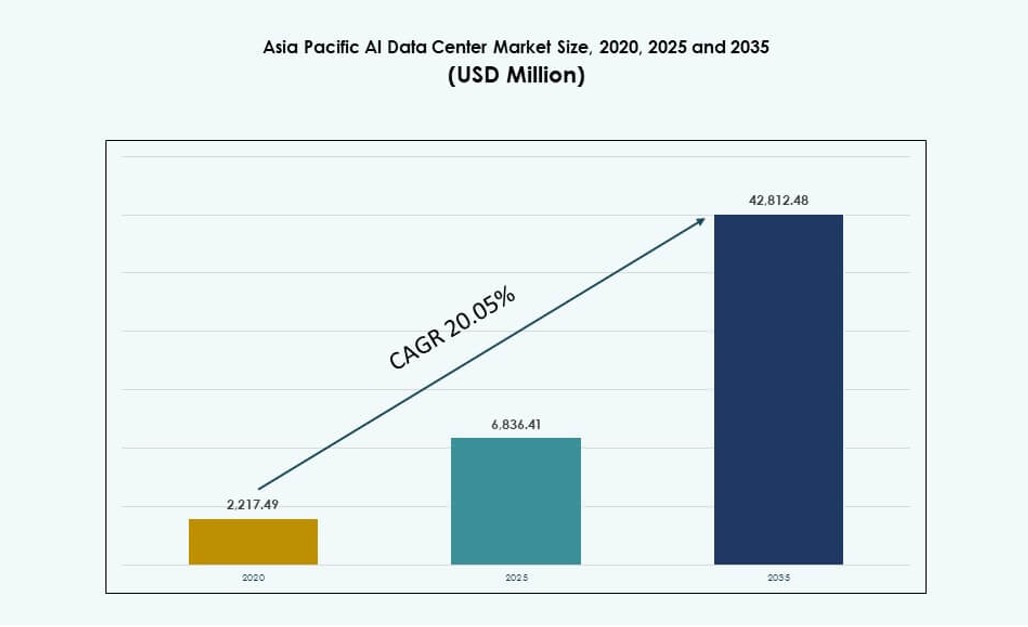

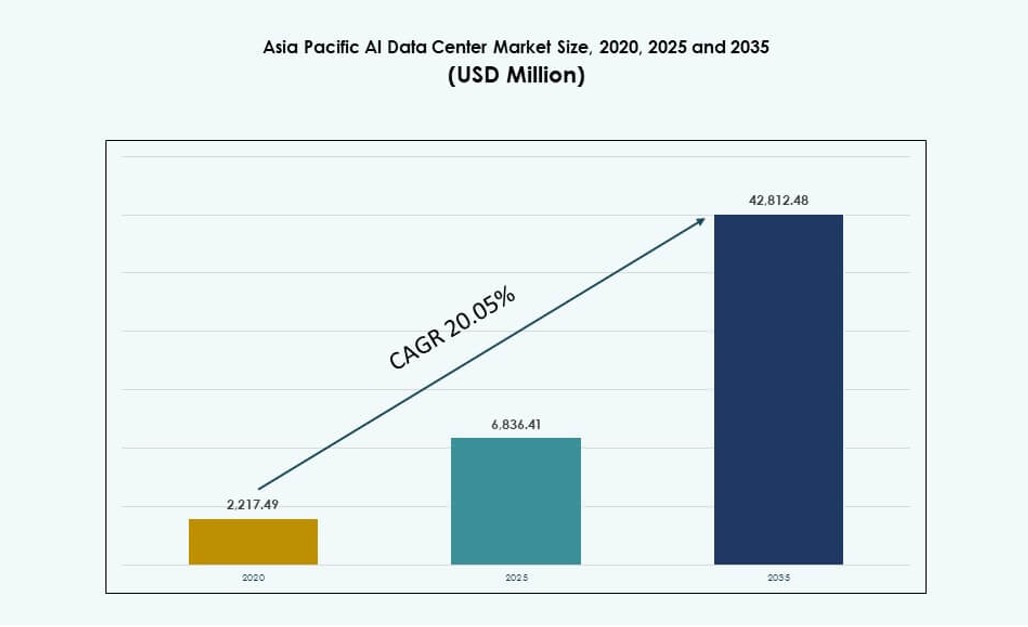

The Asia Pacific AI Data Center Market was valued at USD 2,217.49 million in 2020 and USD 6,836.41 million in 2025, and it is anticipated to reach USD 42,812.48 million by 2035, at a CAGR of 20.05% during the forecast period.
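As a quick arithmetic check on the headline figures, the compound annual growth rate between two values is (end / start)^(1 / years) - 1. The sketch below applies it to the 2025 and 2035 market sizes stated above. It assumes ten annual compounding periods between 2025 and 2035, and it yields roughly 20.1%, close to the reported 20.05%. The small gap may reflect rounding or a period convention in the underlying model that the report does not specify.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market sizes in USD million, taken from the summary above.
size_2025 = 6_836.41
size_2035 = 42_812.48

# Assumption: ten annual compounding periods between 2025 and 2035.
rate = cagr(size_2025, size_2035, years=2035 - 2025)
print(f"Implied CAGR 2025-2035: {rate:.2%}")

# For comparison, project forward from 2025 at the stated 20.05% CAGR.
projected_2035 = size_2025 * (1 + 0.2005) ** 10
print(f"2035 size at 20.05% CAGR: USD {projected_2035:,.2f} million")
```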

| REPORT ATTRIBUTE | DETAILS |
|---|---|
| Historical Period | 2020-2023 |
| Base Year | 2024 |
| Forecast Period | 2025-2035 |
| Asia Pacific AI Data Center Market Size 2025 | USD 6,836.41 Million |
| Asia Pacific AI Data Center Market, CAGR | 20.05% |
| Asia Pacific AI Data Center Market Size 2035 | USD 42,812.48 Million |

The market is advancing due to growing demand for AI-specific infrastructure, widespread cloud expansion, and adoption of high-density GPU clusters. Enterprises are prioritizing investments in purpose-built facilities with optimized cooling, advanced interconnects, and AI orchestration platforms. Governments are supporting national AI frameworks with sovereign cloud policies and digital economy initiatives. Innovation in edge computing and hybrid architectures is reshaping infrastructure delivery models. Hyperscale firms and colocation providers are aligning data center designs to meet AI training and inference demands. The region attracts investors seeking long-term returns from scalable, sustainable AI infrastructure.

East Asia dominates the landscape, led by China, Japan, and South Korea due to strong cloud penetration, national AI strategies, and dense tech clusters. South Asia, especially India, is emerging fast, driven by hyperscale expansion and AI skill availability. Southeast Asia and Oceania show strong momentum, with Singapore, Indonesia, and Australia investing in AI zones and edge deployments. The region balances centralized and distributed architectures to meet local data regulations and latency needs. The Asia Pacific AI Data Center Market reflects a dynamic shift toward localized AI capacity with regional growth drivers supporting broader digital transformation.

Market Dynamics:

Market Drivers

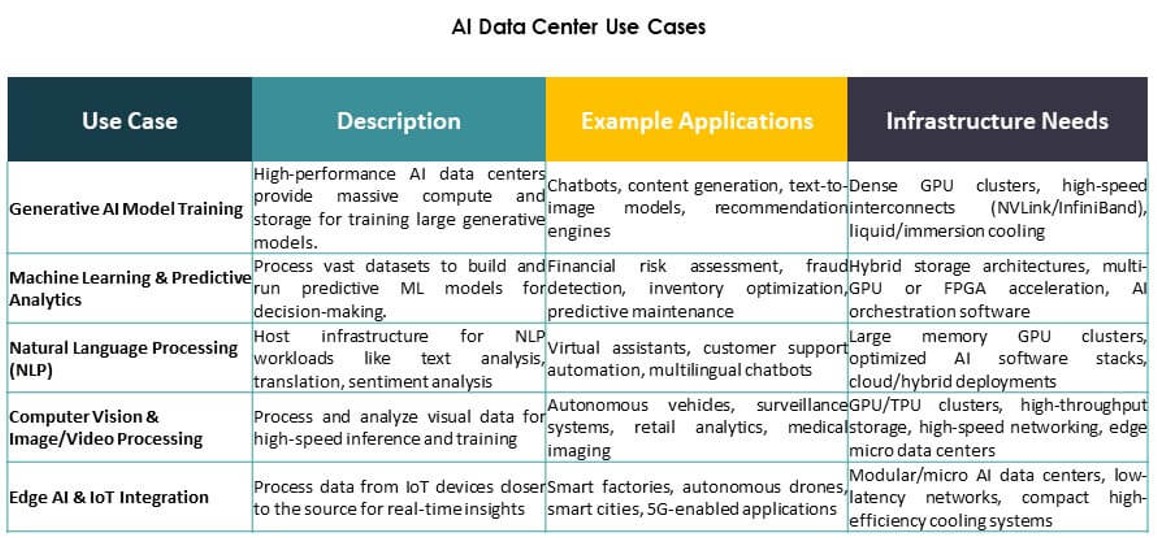

Surge in AI Workload Requirements Driving High-Density GPU Infrastructure and New Facility Builds

The Asia Pacific AI Data Center Market is expanding rapidly due to growing demand for high-performance computing. AI models now require dense GPU clusters and fast interconnects, pushing data centers to redesign existing infrastructure. Enterprises are shifting from traditional CPU-centric servers to GPU and AI-accelerated hardware, which raises the need for cooling efficiency, energy optimization, and rack-level density planning. Operators are investing in next-generation AI training and inference environments, and hyperscale and cloud-native platforms are prioritizing AI compute availability across regional hubs. APAC's strategic importance grows as it becomes central to scaling AI deployments, and the market serves both global players and local AI startups seeking performance, scale, and reach.

- For instance, NVIDIA H100 Tensor Core GPUs deliver up to 3,958 teraFLOPS of FP8 Tensor Core performance per GPU (SXM form factor, with sparsity).
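Per-GPU peak figures like this one scale linearly to server and rack peaks, which is how AI capacity is often quoted. The sketch below uses the 3,958 teraFLOPS FP8 figure (which NVIDIA quotes with sparsity) and assumes a hypothetical layout of 8 GPUs per server and 4 servers per rack; these layout numbers are illustrative, not from this report. Sustained throughput on real training jobs is typically well below theoretical peak.

```python
# Peak FP8 Tensor Core throughput per H100 GPU in teraFLOPS (figure cited above).
H100_FP8_TFLOPS = 3_958

# Hypothetical deployment shape, for illustration only.
GPUS_PER_SERVER = 8
SERVERS_PER_RACK = 4


def peak_petaflops(num_gpus: int, tflops_per_gpu: float = H100_FP8_TFLOPS) -> float:
    """Theoretical peak in petaFLOPS (1 PFLOPS = 1,000 TFLOPS); ignores utilization."""
    return num_gpus * tflops_per_gpu / 1_000


gpus_per_rack = GPUS_PER_SERVER * SERVERS_PER_RACK
print(f"Per server: {peak_petaflops(GPUS_PER_SERVER):.1f} PFLOPS FP8 peak")
print(f"Per rack ({gpus_per_rack} GPUs): {peak_petaflops(gpus_per_rack):.1f} PFLOPS FP8 peak")
```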

Increased Cloud AI Adoption and Platform Integration by Tech Giants and Government AI Missions

Cloud service providers are embedding AI tools directly into their platforms, expanding infrastructure in the process. Hyperscalers such as AWS, Google Cloud, and Azure are establishing regional availability zones to serve local AI workloads. They offer pre-built AI pipelines, APIs, and training environments that attract enterprise and developer demand. National AI strategies across APAC, including India, China, and Singapore, accelerate cloud-based AI development. Strategic initiatives fund data center development, sovereign AI infrastructure, and GPU cluster deployment. Enterprises prefer regionalized AI training environments for latency, data governance, and compliance. The Asia Pacific AI Data Center Market gains from this convergence of cloud, AI, and national policy. It positions the region as a preferred launchpad for enterprise-scale AI initiatives.

- For instance, India’s AIRAWAT-PSAI supercomputer includes 656 NVIDIA A100 GPUs and supports 410 AI petaflops of compute capacity. It serves as a national AI research infrastructure under India’s AI and supercomputing missions.
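The two AIRAWAT-PSAI figures can be cross-checked against each other. Dividing the quoted AI petaflops by the GPU count gives 625 teraFLOPS per GPU, which lines up with NVIDIA's published A100 peak of 624 teraFLOPS for FP16/BF16 Tensor Core math with sparsity. The sketch assumes the "AI petaflops" figure refers to that sparse FP16/BF16 peak; the source does not state the precision.

```python
# Figures quoted for AIRAWAT-PSAI in the text above.
AIRAWAT_GPUS = 656
AIRAWAT_AI_PETAFLOPS = 410

# NVIDIA's published A100 FP16/BF16 Tensor Core peak with sparsity, in teraFLOPS.
# Assumption: this is the precision behind the "AI petaflops" figure.
A100_SPARSE_FP16_TFLOPS = 624

per_gpu_tflops = AIRAWAT_AI_PETAFLOPS * 1_000 / AIRAWAT_GPUS
expected_pflops = AIRAWAT_GPUS * A100_SPARSE_FP16_TFLOPS / 1_000

print(f"Implied per-GPU peak: {per_gpu_tflops:.0f} TFLOPS")
print(f"656 x 624 TFLOPS = {expected_pflops:.1f} PFLOPS (quoted: {AIRAWAT_AI_PETAFLOPS})")
```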

Growth in Industry-Specific AI Applications Across Telecom, Healthcare, and Manufacturing Verticals

Telecom firms are deploying AI to optimize 5G infrastructure, network slicing, and edge processing. In healthcare, providers use AI to improve diagnostics, imaging, and hospital workflow automation. Manufacturers leverage AI-driven analytics for predictive maintenance, defect detection, and robotics control. This sectoral adoption is leading to vertical-specific data center builds, with operators co-locating AI environments near production centers and research campuses. These workloads demand low latency, edge inference capability, and high-throughput backhaul, while new computer vision and real-time analytics workloads strain traditional facilities. The Asia Pacific AI Data Center Market addresses these vertical needs with tailored capacity and deployment models, making it integral to digital transformation across major industries.

Edge AI and Micro Data Center Deployments Transforming Infrastructure Delivery Models Across Urban Hubs

Demand for low-latency inferencing in retail, mobility, and IoT leads to distributed deployments. Edge AI models require fast data processing near user endpoints. Countries like Japan, South Korea, and Australia see early adoption of edge-ready micro data centers. Retail chains, autonomous systems, and city infrastructure integrate AI for smart operations. These trends shift investment away from centralized training alone toward decentralized execution. New formats include containerized AI nodes, modular edge units, and smart retail pods. They connect to central cloud nodes via fiber and 5G, forming hybrid topologies. The Asia Pacific AI Data Center Market benefits from this edge-core integration, enabling responsive, AI-native services across cities. It supports critical sectors requiring instant, intelligent decision-making.

Market Trends

Rising Liquid Cooling Adoption to Enable 40–100 kW Rack Densities in AI Workloads

Air-based cooling systems struggle to manage the rising heat loads of GPU-based AI servers. Liquid cooling, especially direct-to-chip cooling and rear-door heat exchangers, is gaining momentum. Operators are retrofitting or redesigning facilities to support 40–100 kW per rack. This shift enables the dense AI clusters needed to train large foundation models. Data centers in Singapore, India, and South Korea are early adopters of liquid-cooled racks. Technology suppliers offer modular systems compatible with both retrofit and greenfield builds. Immersion cooling for HPC and AI workloads is also expanding. The Asia Pacific AI Data Center Market reflects this thermal transition as AI becomes more compute-intensive, and the transition steers capex toward sustainable, high-density operations.
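The heat a liquid loop must remove sets the coolant flow rate through Q = m_dot * c_p * dT. The sketch below estimates water flow for the 40–100 kW rack range cited above. It assumes all rack heat goes into a water loop with a 10 K supply-to-return temperature rise; both the full-capture assumption and the 10 K rise are illustrative, and real direct-to-chip designs often leave part of the load to air cooling.

```python
# Specific heat of water, J/(kg*K), near typical loop temperatures.
WATER_CP = 4_186.0
# Approximate density of water, kg/L.
WATER_DENSITY = 1.0


def coolant_flow_lpm(heat_kw: float, delta_t_k: float = 10.0) -> float:
    """Water flow in litres per minute needed to absorb `heat_kw` with a rise of `delta_t_k`.

    Rearranges Q = m_dot * cp * dT for the mass flow m_dot (kg/s).
    """
    mass_flow_kg_s = heat_kw * 1_000 / (WATER_CP * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY * 60


for rack_kw in (40, 70, 100):
    print(f"{rack_kw:>3} kW rack: ~{coolant_flow_lpm(rack_kw):.0f} L/min at dT = 10 K")
```

Doubling the allowed temperature rise halves the required flow, which is one reason designers push for warmer return water.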

Integration of AI-Specific Interconnects and NVLink-Compatible Infrastructure Enhancing Compute Efficiency

High-bandwidth interconnects are critical for linking GPUs in AI clusters. Facilities now require topologies that support NVIDIA NVLink, PCIe Gen5, and high-performance Ethernet fabrics. These features enable model training across multiple nodes with low latency and high throughput. Emerging centers support AI supercomputers, large-scale inference, and multi-GPU optimization. Vendors integrate topology-aware orchestration tools to reduce training time, and RDMA and smart networking layers improve performance for distributed AI jobs. The Asia Pacific AI Data Center Market is evolving to meet these specifications for hyperscale and colocation customers, enhancing the region's competitiveness for global AI deployments.
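One reason interconnect bandwidth matters so much in distributed training is gradient synchronization. In a bandwidth-optimal ring all-reduce, each of N GPUs transfers 2(N-1)/N times the gradient size per step, so communication time scales roughly inversely with link bandwidth. The sketch below applies that standard cost model while ignoring latency and compute overlap. The model size, gradient precision, GPU count, and link speeds are hypothetical illustration values, not figures from this report.

```python
def ring_allreduce_seconds(num_gpus: int, message_bytes: float, link_gbytes_per_s: float) -> float:
    """Bandwidth-only estimate of ring all-reduce time.

    Each GPU transfers 2 * (N - 1) / N * message_bytes over its link
    (reduce-scatter followed by all-gather). Latency terms are ignored.
    """
    if num_gpus < 2:
        return 0.0
    bytes_per_gpu = 2 * (num_gpus - 1) / num_gpus * message_bytes
    return bytes_per_gpu / (link_gbytes_per_s * 1e9)


# Hypothetical workload: 7 billion parameters with 2-byte (BF16) gradients.
grad_bytes = 7e9 * 2

for bw in (50, 400):  # hypothetical per-GPU link bandwidths in GB/s
    t = ring_allreduce_seconds(num_gpus=64, message_bytes=grad_bytes, link_gbytes_per_s=bw)
    print(f"{bw:>3} GB/s per GPU: ~{t:.2f} s per full gradient all-reduce")
```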

Shift Toward AI-Optimized Colocation Suites With Pre-Provisioned Compute and Thermal Zones

Colocation providers are developing dedicated AI zones with pre-provisioned power, cooling, and rack footprints. These suites cater to customers who want fast deployment without redesign cycles. They include structured cabling, GPU-ready racks, and enhanced air handling or liquid-cooling compatibility. Clients benefit from shorter provisioning times and predictable support for AI workloads. Markets such as Hong Kong and Tokyo lead in ready-to-deploy AI colocation. Smaller enterprises use these zones to train and test smaller LLMs without hyperscale capex. The Asia Pacific AI Data Center Market reflects this modularization of colocation services tailored for AI clients, supporting hybrid adoption models at scale.

Emergence of Sovereign AI Zones Backed by National AI Cloud and Research Frameworks

Governments are funding data centers with secure infrastructure for AI model development under sovereign frameworks. These zones support national language models, industry AI initiatives, and regulated data training. Infrastructure partners deploy secured GPU clusters with restricted access and audited AI pipelines. Examples include India’s Bhashini initiative and China’s provincial AI zones. These developments create localized demand for AI-native compute within national borders. Operators work with public institutions to align infrastructure with policy goals. The Asia Pacific AI Data Center Market incorporates this government-industry collaboration into its structure. It positions the region as a leader in sovereign AI readiness.

Market Challenges

Power Constraints, Land Scarcity, and Environmental Pressures in Dense Urban AI Clusters

Power availability has become a bottleneck in cities such as Tokyo, Singapore, and Seoul. AI data centers demand high and stable power loads, but urban grids face competing requirements. Land scarcity and strict zoning make new builds difficult. Permitting delays often hinder hyperscale construction timelines. Operators must navigate evolving energy regulations and carbon targets. Liquid cooling systems also require significant water resources in some designs. Sustainability mandates create tension between density goals and resource limitations. The Asia Pacific AI Data Center Market must resolve these challenges to maintain its growth momentum. It needs long-term coordination with utility, municipal, and regulatory bodies.
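To see why urban grids struggle, a rough estimate of facility power multiplies IT load by power usage effectiveness (PUE), the ratio of total facility power to IT equipment power. The sketch below assumes a hypothetical AI hall of 200 racks at 40 kW and at 100 kW per rack, and an assumed PUE of 1.3; neither figure comes from this report, and the annual energy assumes continuous full load.

```python
HOURS_PER_YEAR = 8_760


def facility_demand_mw(racks: int, kw_per_rack: float, pue: float) -> float:
    """Total facility power in MW: IT load scaled by PUE (total power / IT power)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return racks * kw_per_rack * pue / 1_000


# Hypothetical AI hall and efficiency assumption, for illustration only.
RACKS = 200
PUE = 1.3

for kw in (40, 100):
    mw = facility_demand_mw(RACKS, kw, PUE)
    gwh = mw * HOURS_PER_YEAR / 1_000
    print(f"{RACKS} racks at {kw} kW: ~{mw:.1f} MW from the grid, ~{gwh:.0f} GWh/year at full load")
```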

Limited Access to AI-Capable Hardware and Talent Delays Deployment Timelines Across Developing Economies

Supply chain gaps restrict timely access to high-end GPUs such as the NVIDIA H100 or AMD MI300. Smaller countries in Southeast Asia and South Asia struggle to secure these components, and long lead times delay deployments and limit innovation outside major hubs. Similarly, a shortage of trained engineers and AI infrastructure architects delays project readiness, with emerging markets lacking skills in orchestration, monitoring, and workload optimization. Cloud providers offer managed AI services, but these still depend on core infrastructure availability. As a result, the Asia Pacific AI Data Center Market grows unevenly where access to talent and hardware remains limited. Addressing this requires regional training programs and strategic vendor partnerships.

Market Opportunities

Expansion of Enterprise AI Across Mid-Sized Firms Driving Colocation and Hybrid Deployment Demand

Enterprises across manufacturing, logistics, and retail are scaling internal AI teams. These firms require scalable but cost-effective compute environments. AI-ready colocation provides flexibility with strong performance and low capex. Regional operators bundle GPU hardware, orchestration, and compliance features. This opens growth in cities beyond Tier-1 hubs. The Asia Pacific AI Data Center Market supports these needs through modular capacity and hybrid service models. It aligns with SME digitization and sector-specific innovation.

Public Sector Investments in AI Research Infrastructure Creating Long-Term Regional Demand

National governments fund AI centers linked to education, defense, and public health. These centers demand stable GPU clusters and localized compute. Infrastructure partnerships ensure performance, security, and data residency compliance. Operators align offerings with public research goals. The Asia Pacific AI Data Center Market benefits from this consistent investment cycle. It sustains demand across political and fiscal timelines.

Market Segmentation

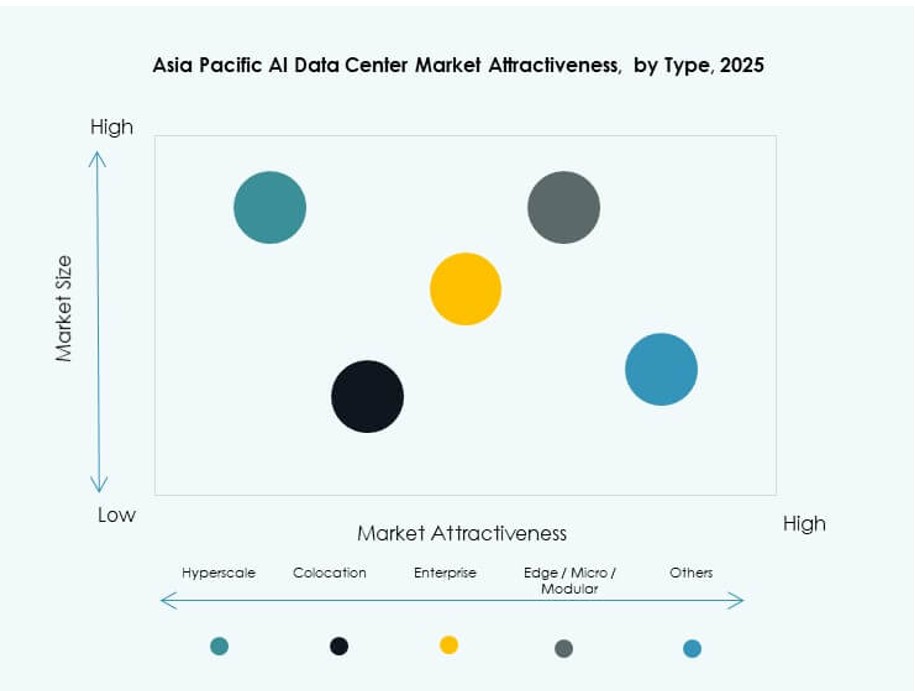

By Type

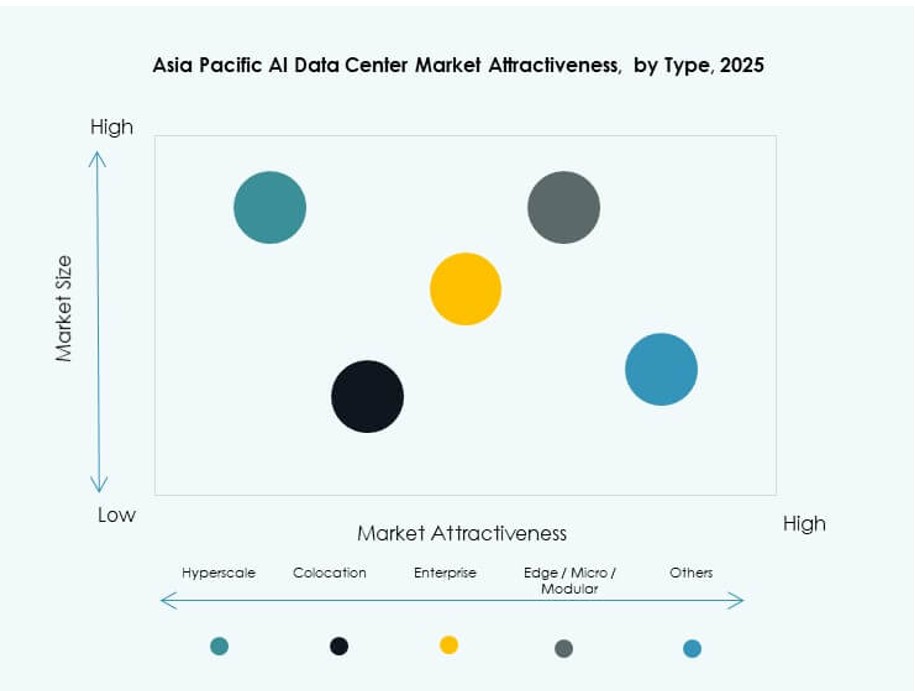

Hyperscale data centers dominate the Asia Pacific AI Data Center Market with a significant share, driven by major investments from global cloud and AI firms. These facilities offer massive scale, high GPU density, and integration with AI accelerators. Colocation and enterprise deployments remain strong in metros with regulated AI environments. Edge and micro data centers are growing across Southeast Asia to meet low-latency requirements for retail, mobility, and smart city applications.

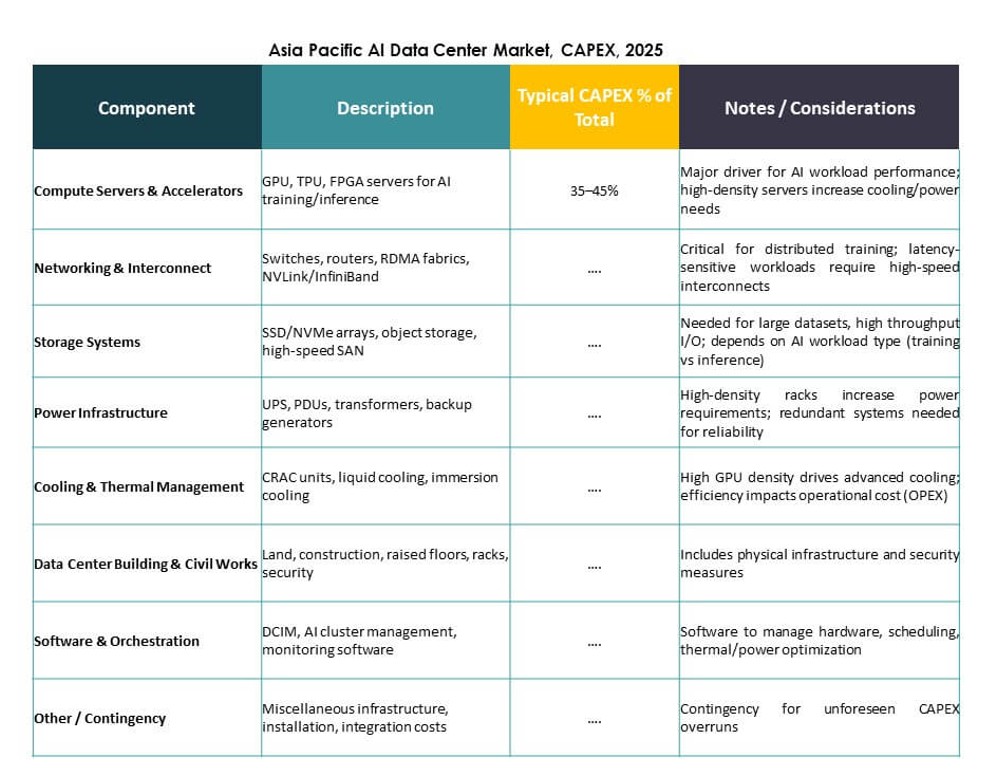

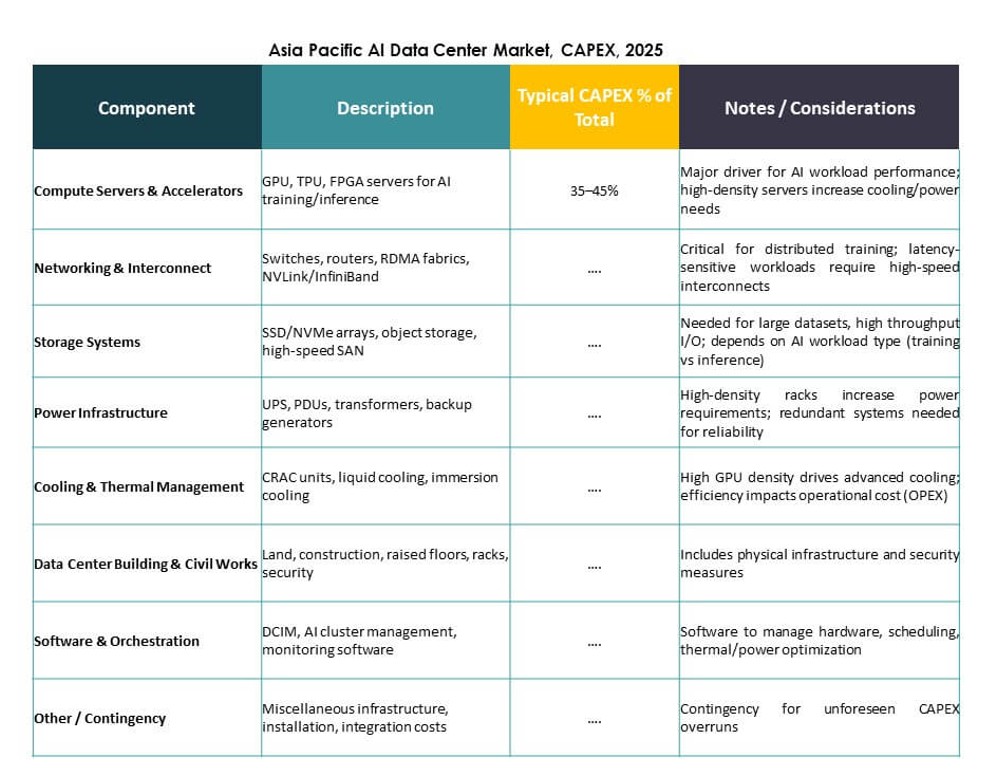

By Component

Hardware represents the largest share due to extensive GPU, CPU, and storage requirements for AI workloads. Major investments target high-bandwidth interconnects, power modules, and thermal management. Software and orchestration are gaining ground as AI training demands advanced workload scheduling, cluster scaling, and container management. The services segment is growing with demand for design, consulting, and managed operations in AI-specific environments.

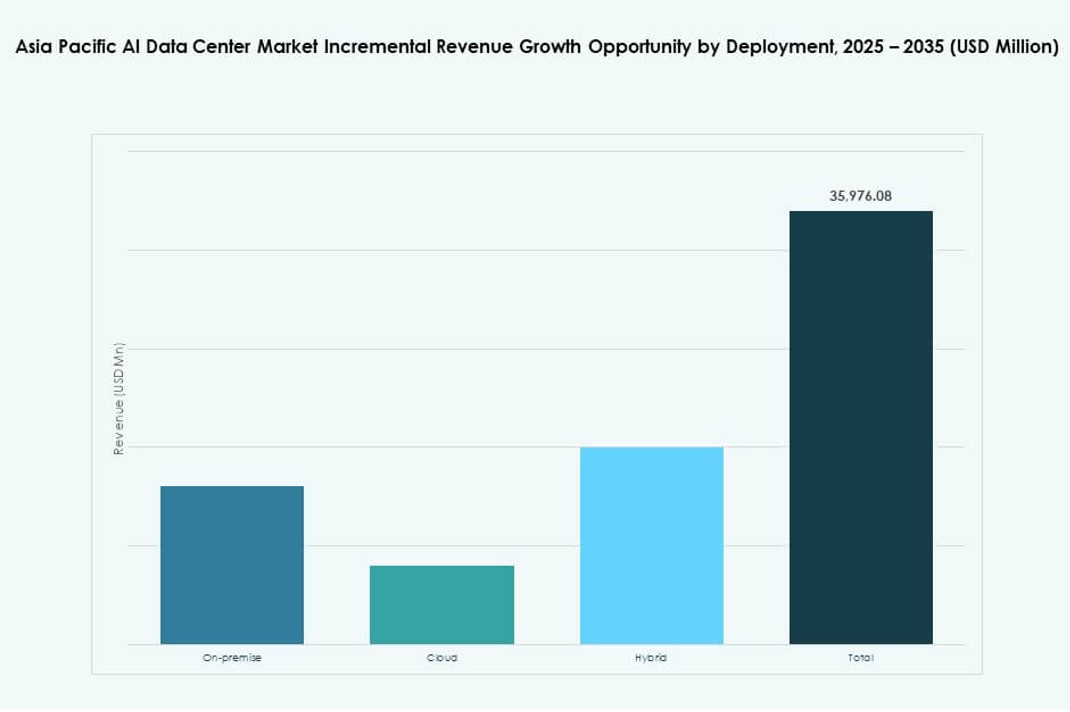

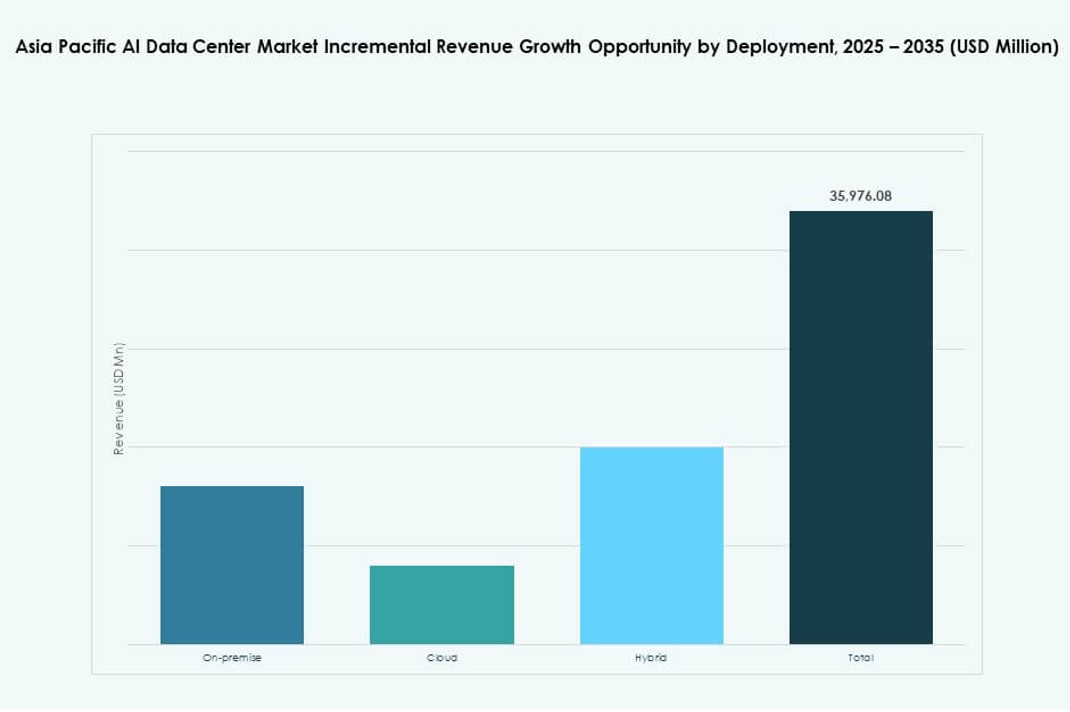

By Deployment

Cloud-based AI deployment leads the market, driven by hyperscaler expansion and SME adoption of managed AI infrastructure. On-premise remains relevant for regulated industries and AI training in controlled environments. Hybrid deployment models are rising as enterprises balance cloud scalability with edge-based inference and latency-sensitive use cases. The Asia Pacific AI Data Center Market aligns with varied enterprise maturity levels across subregions.

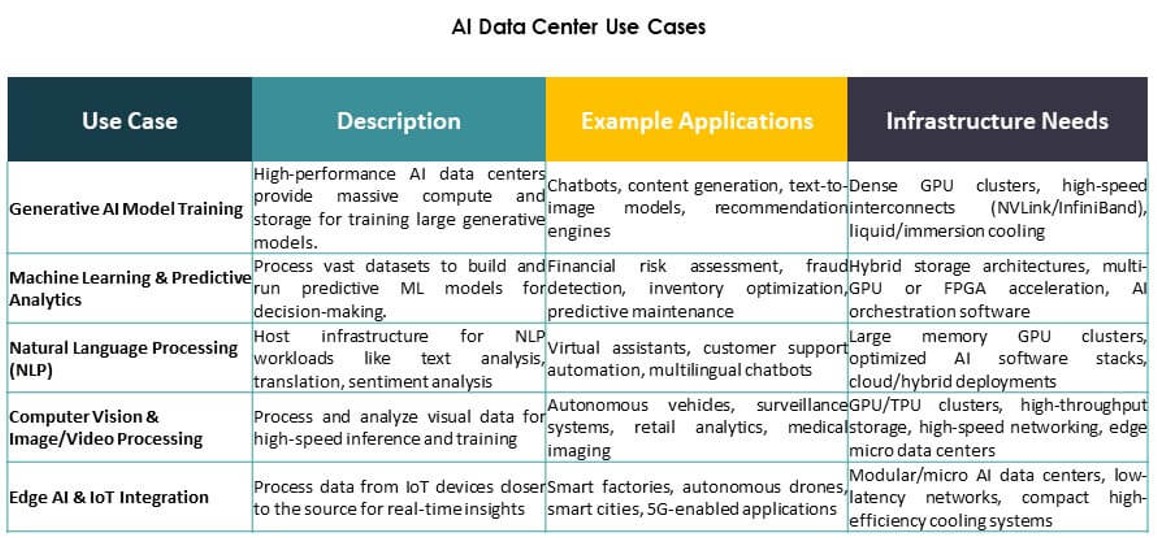

By Application

Machine Learning holds the largest application share, especially in enterprise AI and industrial analytics. Generative AI is the fastest-growing segment, fueled by foundation model training and LLM inference. Computer Vision drives demand in surveillance, retail, and industrial automation. NLP powers contact centers, chatbots, and translation tools. Other applications include recommendation engines and time-series forecasting across BFSI and logistics.

By Vertical

IT and Telecom lead the vertical segmentation, driven by core AI infrastructure and 5G optimization use cases. BFSI and Retail sectors follow closely, focusing on fraud detection, personalization, and chatbot services. Healthcare is emerging with rapid growth in AI diagnostics and hospital automation. Automotive and manufacturing demand grows with AI-driven production, robotics, and R&D. The Asia Pacific AI Data Center Market supports cross-sector transformation through vertical-aligned infrastructure.

Regional Insights

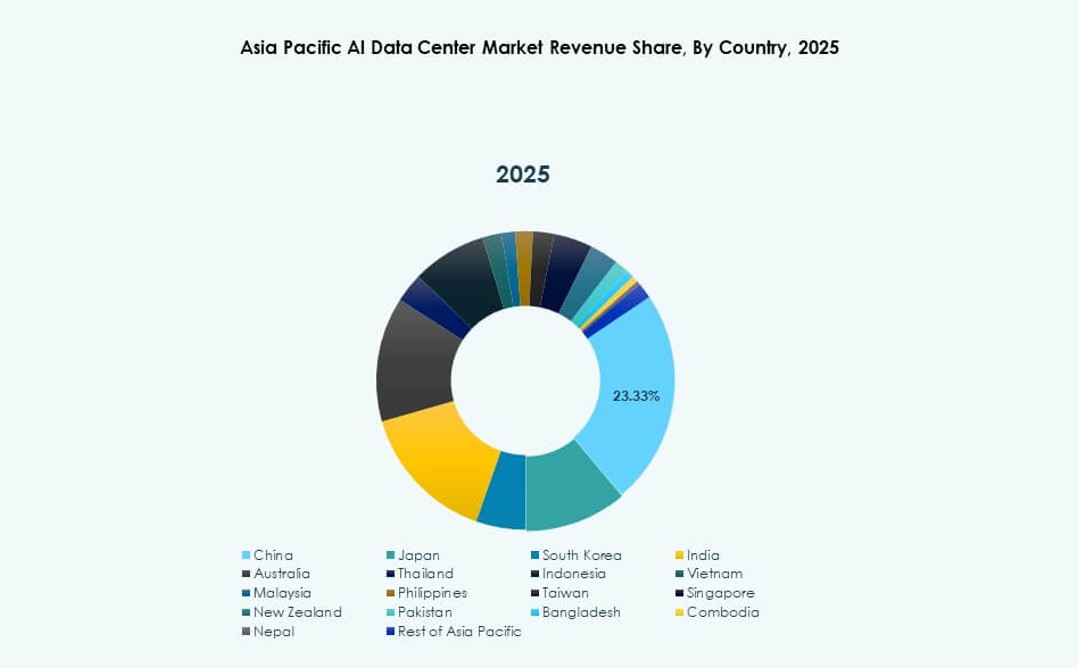

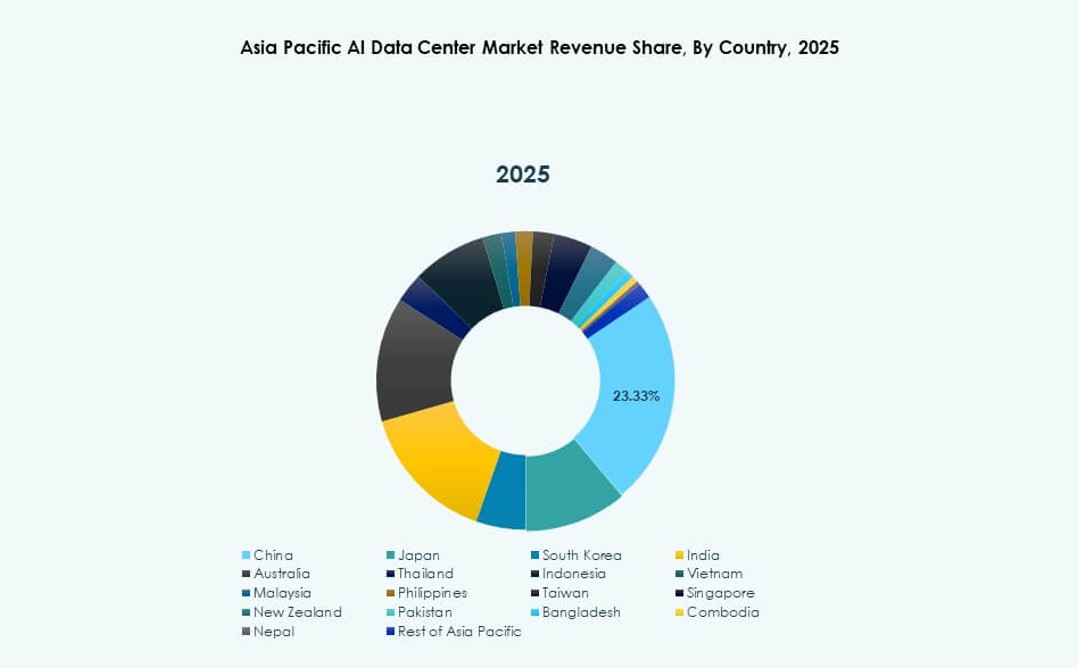

East Asia Dominates With Over 42% Market Share Led by China, Japan, and South Korea

East Asia holds the largest share of the Asia Pacific AI Data Center Market at over 42%. China leads with massive public and private investment in AI supercomputing hubs. Japan and South Korea contribute through robotics, edge AI, and national AI policy execution. These countries benefit from dense urban tech clusters, strong energy infrastructure, and semiconductor ecosystems. Hyperscale and sovereign AI deployments converge here to drive regional leadership. It remains the AI infrastructure anchor in APAC.

- For instance, Alibaba Cloud’s Tongyi Qianwen (Qwen-7B) model achieved a 56.7 score on the MMLU benchmark across 57 tasks in 2023.

South Asia Emerging With Over 26% Share Driven by India’s Cloud Expansion and Talent Base

South Asia accounts for over 26% of the Asia Pacific AI Data Center Market. India anchors this growth with a strong developer base, hyperscale cloud zones, and national AI frameworks. Investment from AWS, Microsoft, and local firms accelerates training and inference capabilities. Bangladesh and Pakistan are at an early stage of development but show demand from fintech and healthcare. South Asia gains traction through affordability, skilled talent, and rising enterprise demand, and it is evolving into a regional AI compute hub.

- For instance, in September 2023, Reliance Jio partnered with NVIDIA to build AI infrastructure in India using NVIDIA GH200 Grace Hopper superchips and DGX Cloud systems. The collaboration supports large-scale AI workloads through AI-ready data centers operated by Jio Platforms.

Southeast Asia and Oceania Capture Nearly 32% Share Backed by Strategic Location and AI Readiness

Southeast Asia and Oceania together hold close to 32% market share. Singapore, Indonesia, and Australia lead infrastructure deployment through regional AI partnerships. Edge deployments, 5G integration, and sustainable cooling innovation stand out. Thailand, Vietnam, and the Philippines see strong GenAI and edge AI use cases in commerce and education. This subregion balances global cloud access and localized innovation. The Asia Pacific AI Data Center Market benefits from its centrality and digital ecosystem strength.
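Applying the subregional shares to a market total gives an approximate size for each subregion. The report does not state which year the shares refer to; the sketch below assumes they apply to the 2025 estimate of USD 6,836.41 million and treats "over 42%", "over 26%", and "nearly 32%" as exactly 42%, 26%, and 32%, so the results are indicative only.

```python
MARKET_2025_USD_M = 6_836.41

# Approximate shares from the regional insights above (assumed to apply to 2025).
SHARES = {
    "East Asia": 0.42,
    "South Asia": 0.26,
    "Southeast Asia and Oceania": 0.32,
}

# The rounded shares should cover the whole market.
assert abs(sum(SHARES.values()) - 1.0) < 1e-9

implied = {region: share * MARKET_2025_USD_M for region, share in SHARES.items()}
for region, value in implied.items():
    print(f"{region:<28} ~USD {value:,.0f} million")
```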

Competitive Insights:

- ST Telemedia Data Centres

- Princeton Digital Group

- AirTrunk

- Microsoft (Azure)

- Amazon Web Services (AWS)

- Google Cloud

- Meta Platforms

- NVIDIA

- Dell Technologies

- Equinix

The Asia Pacific AI Data Center Market is highly competitive, shaped by hyperscalers, regional colocation providers, and global technology vendors. Operators like ST Telemedia, Princeton Digital Group, and AirTrunk lead facility deployment across high-growth countries. Cloud giants including Microsoft, AWS, and Google continue to expand AI training zones across urban and edge nodes. Meta and NVIDIA push demand through foundation model scaling and hardware integration. Dell supplies AI server and storage hardware, while Equinix provides interconnected colocation capacity; both add infrastructure flexibility aligned with evolving AI workloads. Companies compete on cooling innovation, energy efficiency, and AI-specific compute density. The market remains dynamic, with regional expansion, ecosystem partnerships, and alignment with sovereign AI zones driving sustained competition.

Recent Developments:

- In October 2025, AirTrunk formed a strategic partnership with Humain and Blackstone to develop next-generation AI data centres in Saudi Arabia, starting with a $3 billion campus investment aimed at hyperscale and AI infrastructure.

- In October 2025, Aligned Data Centers agreed to be acquired by a consortium including BlackRock and MGX in a record-setting $40 billion deal that ranks among the largest infrastructure acquisitions ever. The transaction is expected to expand Aligned's footprint and accelerate its deployment of hyperscale and AI-optimized data centres globally.

- In April 2025, ST Telemedia Global Data Centres launched an AI-ready data centre campus in Kolkata, India, with an investment of INR 450 crore to support growing AI workloads in the Asia Pacific region.