Executive summary:

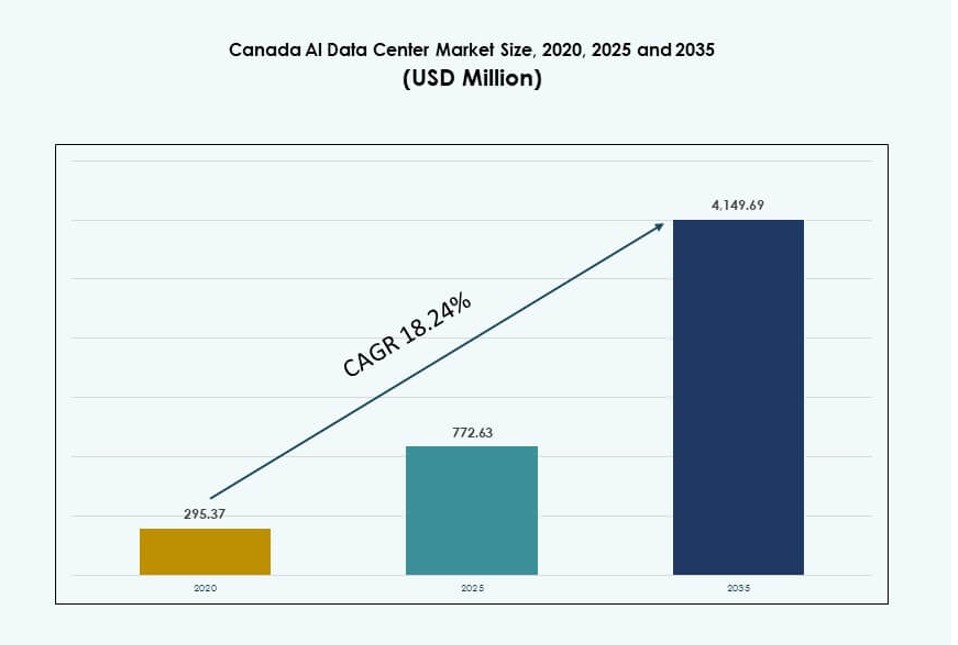

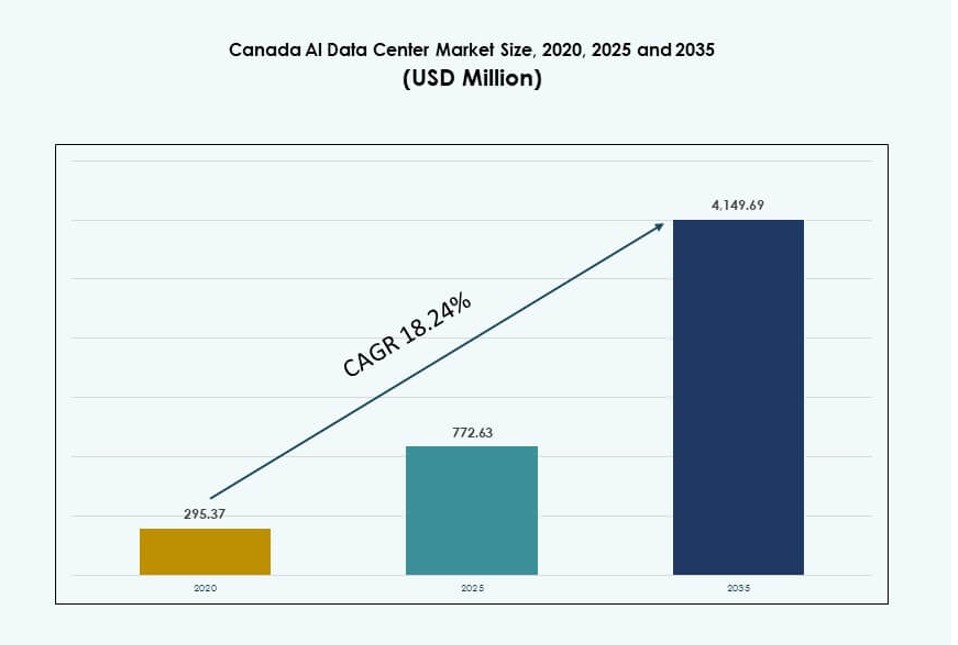

The Canada AI Data Center Market grew from USD 295.37 million in 2020 to USD 772.63 million in 2025 and is anticipated to reach USD 4,149.69 million by 2035, at a CAGR of 18.24% during the forecast period.

| REPORT ATTRIBUTE | DETAILS |
| --- | --- |
| Historical Period | 2020-2023 |
| Base Year | 2024 |
| Forecast Period | 2025-2035 |
| Canada AI Data Center Market Size 2025 | USD 772.63 Million |
| Canada AI Data Center Market, CAGR | 18.24% |
| Canada AI Data Center Market Size 2035 | USD 4,149.69 Million |
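The growth rate quoted above follows the standard compound annual growth rate relation, CAGR = (end / start)^(1 / years) - 1. The snippet below is a minimal sketch of that arithmetic using the 2025 and 2035 figures from the table. The function names and the 10-year span are assumptions made for illustration, and because the report does not state its exact endpoints or rounding, the computed rate may differ slightly from the published 18.24%.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` annually for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures from the report (USD million); the 10-year span is an assumption.
SIZE_2025 = 772.63
SIZE_2035 = 4149.69
YEARS = 10

implied_rate = cagr(SIZE_2025, SIZE_2035, YEARS)
projected_2035 = project(SIZE_2025, 0.1824, YEARS)

print(f"Implied CAGR 2025-2035: {implied_rate:.2%}")
print(f"2035 size at 18.24% CAGR: USD {projected_2035:,.2f} million")
```

Running both directions (rate from endpoints, endpoint from rate) is a quick way to check that a published size and growth rate are consistent with each other.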

The market is driven by rising demand for high-performance computing, rapid adoption of generative AI models, and increased deployment of GPU-accelerated infrastructure. Operators are investing in liquid cooling, advanced orchestration, and dense rack configurations to support AI training and inference workloads. Government incentives and sustainability goals are pushing adoption of clean energy-powered facilities. Cloud providers, hardware vendors, and local operators are expanding footprints to meet AI-specific needs. Infrastructure agility and energy efficiency have become key decision factors. The market offers strong investment returns through scalable deployments.

Ontario and Quebec lead the market due to abundant renewable energy, low power costs, and proximity to enterprise clients and AI research hubs. Toronto and Montreal have emerged as core hyperscale zones supporting national AI clusters. Alberta is an emerging region, gaining attention for its cooling-friendly climate and recent large-scale investments. British Columbia supports edge deployments tied to local industries and AI applications. These regional variations reflect differences in power availability, network connectivity, and policy support. Together, the regions form a distributed but integrated national AI infrastructure base.

Market Dynamics:

Market Drivers

Rising Integration of High-Density AI Workloads Across Hyperscale and Enterprise Facilities

Hyperscale operators and large enterprises are expanding GPU-intensive workloads, requiring higher rack densities. AI training workloads demand rack densities exceeding 30 kW per cabinet, driving infrastructure upgrades. The Canada AI Data Center Market supports diverse AI use cases across natural language processing (NLP), computer vision (CV), and generative AI (GenAI). Facilities are adopting advanced cooling systems and smart power distribution to manage thermal loads. High-density AI servers accelerate the shift from traditional IT infrastructure. AI readiness has become a key metric in site selection and facility design. Businesses invest in scalable architectures to ensure continuous performance. Strategic deployments support national AI adoption goals and cross-industry transformation. These factors drive long-term infrastructure investments.

Government Funding and AI Policy Incentives Driving Infrastructure Development

Federal and provincial programs provide grants, tax incentives, and funding for AI ecosystem development. Policies emphasize data sovereignty, clean energy, and sustainable AI innovation. These programs boost investor confidence and accelerate facility launches. The Canada AI Data Center Market benefits from public-private AI partnerships focused on research and infrastructure. Designations of AI hubs in cities like Montreal and Toronto encourage hyperscale builds. Public cloud expansions align with government-backed AI strategy. Policy clarity helps streamline approvals and grid interconnection processes. Investors view Canada’s stable regulatory climate as a competitive advantage. This policy environment promotes growth in AI-powered infrastructure.

- For instance, the Quebec government committed CAD 100 million through 2025 to support Mila’s AI infrastructure, boosting national compute capacity. The initiative enables large-scale GPU clusters, including NVIDIA H100 systems, for advanced research and model training.

Strong Renewable Energy Availability and Focus on Sustainable AI Operations

Canada’s abundant hydroelectric resources reduce power costs and carbon footprints in AI data centers. Hyperscalers choose Canadian sites to align with global ESG mandates. Green energy access supports sustainability goals and long-term cost savings. The Canada AI Data Center Market attracts operators seeking clean power without compromising performance. Regions like Quebec and British Columbia offer low power usage effectiveness (PUE) advantages. Operators deploy liquid and rear-door cooling for efficiency. Long-term power purchase agreements help secure reliable supply and fixed rates. Clean energy attracts AI training workloads from global clients. Clean power availability reinforces Canada’s position in sustainable infrastructure.

Edge and Regional Expansion to Serve Localized AI Use Cases and 5G Growth

Edge and micro data centers are expanding across Tier II and Tier III cities to support low-latency AI applications. Healthcare imaging, retail analytics, and automotive use cases require proximity to end users. The Canada AI Data Center Market supports regional compute zones with smaller, AI-ready nodes. Localized infrastructure helps reduce backhaul congestion and improve inference response times. Edge AI supports emerging 5G deployments and real-time services. Distributed models lower risk and enable modular scaling. Retail, banking, and public sector players drive regional edge demand. Containerized AI workloads allow flexible deployment models. This expansion strengthens Canada’s edge computing landscape.

- For instance, Bell Canada deployed NVIDIA A100 GPUs across its 5G edge cores in Ontario and Quebec, supporting real-time analytics in over 20 cities. The infrastructure enables sub-5 ms latency for AI-driven edge applications.

Market Trends

Growing Adoption of Liquid Cooling and Direct-to-Chip Thermal Management Systems

Operators are investing in advanced cooling technologies to support rising rack power densities. Direct-to-chip and immersion cooling systems are becoming standard in new AI builds. The Canada AI Data Center Market is witnessing higher deployment of liquid-based cooling to reduce power usage effectiveness (PUE). These technologies improve thermal management efficiency for dense AI workloads. Hyperscalers are prioritizing cooling innovation in site planning. Rear-door heat exchangers and cold plate integration are being adopted at scale. Facilities pair liquid cooling with airflow optimization and real-time monitoring. Cooling system selection now directly influences rack-level AI performance. This shift is reshaping facility design strategies.
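PUE (power usage effectiveness) is the ratio of total facility power to the power delivered to IT equipment, so a value closer to 1.0 means less overhead for cooling and power distribution. The sketch below illustrates how a lower cooling overhead reduces PUE. All load figures and the `pue` helper are hypothetical values and names chosen for illustration, not data from the report.

```python
def pue(it_load_kw: float, cooling_kw: float, other_overhead_kw: float) -> float:
    """Power usage effectiveness = total facility power / IT equipment power."""
    if it_load_kw <= 0:
        raise ValueError("it_load_kw must be positive")
    total_kw = it_load_kw + cooling_kw + other_overhead_kw
    return total_kw / it_load_kw


# Hypothetical 1 MW IT load; the overhead figures are illustrative assumptions.
IT_LOAD_KW = 1000.0
air_cooled = pue(IT_LOAD_KW, cooling_kw=400.0, other_overhead_kw=100.0)
liquid_cooled = pue(IT_LOAD_KW, cooling_kw=150.0, other_overhead_kw=100.0)

print(f"Air-cooled PUE:    {air_cooled:.2f}")
print(f"Liquid-cooled PUE: {liquid_cooled:.2f}")
```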

Shift Toward Modular and Prefabricated AI Data Center Deployment Models

Faster deployment timelines are driving demand for modular infrastructure tailored for AI use cases. Vendors offer prefabricated units with integrated power, cooling, and rack systems. The Canada AI Data Center Market sees growing use of containerized data halls in remote or edge zones. Modular builds shorten time-to-AI-readiness and reduce on-site labor. Prefab units support phased scaling for GPU expansion. Operators use modular layouts for redundancy and workload isolation. These designs help meet fast-evolving AI demand without full facility overhauls. The modular approach enables scalable, repeatable infrastructure across multiple locations.

AI-Driven Infrastructure Management Through DCIM and Predictive Maintenance Tools

Facility operators are adopting AI-powered data center infrastructure management (DCIM) platforms. These tools provide real-time visibility into energy, airflow, rack health, and asset tracking. The Canada AI Data Center Market leverages predictive analytics to reduce downtime and improve efficiency. DCIM with machine learning models enables smart cooling control and power balancing. Automated alerts help prevent thermal spikes in high-density racks. AI tools optimize capacity planning and SLA performance. Real-time dashboards enhance transparency for clients with AI workloads. AI-driven management improves lifecycle management for high-value infrastructure assets.

Demand for GPU-as-a-Service and AI Workload Placement in Hybrid Models

Service providers are offering GPU-as-a-Service platforms for clients without in-house AI infrastructure. Enterprises prefer hybrid AI deployment models with both on-prem and cloud GPU access. The Canada AI Data Center Market supports this with GPU-ready colocation and cloud-native designs. High-speed interconnects and fabric-based architectures enable efficient workload placement. Demand for Kubernetes-native GPU clusters is rising among developers. Operators are integrating NVMe over Fabrics (NVMe-oF) and RDMA to support fast inference cycles. AI users require flexibility in workload orchestration and multi-zone deployment. This demand shapes rack design, network layers, and service models.

Market Challenges

High Infrastructure Costs and Long Procurement Cycles for AI-Optimized Equipment

Deploying AI-ready data centers involves costly investments in GPUs, liquid cooling, and power systems. Long lead times for high-performance hardware delay buildouts and capacity upgrades. The Canada AI Data Center Market faces pressure from global competition for chips and components. Equipment vendors prioritize large U.S. or Asian clients, reducing availability for Canadian operators. Facility costs increase with dense rack configurations and specialized cooling. Budget constraints impact small and medium operators seeking AI infrastructure upgrades. Sourcing delays disrupt project timelines and tenant onboarding. Businesses must balance CapEx with future AI demand. These constraints create deployment bottlenecks across regions.

Grid Interconnection Delays and Regional Power Distribution Constraints

Interconnecting high-density AI data centers to the local grid poses technical and regulatory hurdles. Certain regions face transformer shortages or substation capacity limits. The Canada AI Data Center Market encounters project delays tied to utility coordination. Rapid AI growth puts pressure on grid infrastructure that was not designed for high power draw. Zoning approvals, environmental assessments, and utility agreements extend project timelines. Grid congestion in urban zones restricts expansion of dense GPU workloads. Operators explore alternative energy setups, but regulations slow implementation. These constraints limit fast-track development of new AI hubs.

Market Opportunities

National AI Clusters and Research Institutions Driving Regional Growth

The AI cluster ecosystem in Canada creates demand for local AI infrastructure. Universities, startups, and research labs partner with data center providers. The Canada AI Data Center Market benefits from proximity to centers of AI excellence. Academic and commercial collaborations support sustained compute demand. Growth in public AI investments enables regional facility launches. This ecosystem supports long-term AI adoption across industries.

Surging Demand for Inference Workloads Across BFSI and Retail Sectors

AI inferencing for fraud detection, personalization, and chatbots is rising across enterprises. BFSI and retail clients seek low-latency infrastructure in major metros. The Canada AI Data Center Market enables near-real-time inference with GPU-ready racks. Demand for inference-specific deployment zones opens new service offerings. This demand creates growth beyond large training clusters.

Market Segmentation

By Type

The hyperscale segment dominates the Canada AI Data Center Market due to large-scale GPU cluster demand. Hyperscalers deploy dense racks supporting AI model training and cloud AI services. Colocation and enterprise facilities follow, serving hybrid and private AI needs. Edge and micro data centers are emerging to support latency-sensitive AI inference. These smaller nodes expand AI reach across non-metro regions. Growth in all types aligns with AI model complexity and use case diversity.

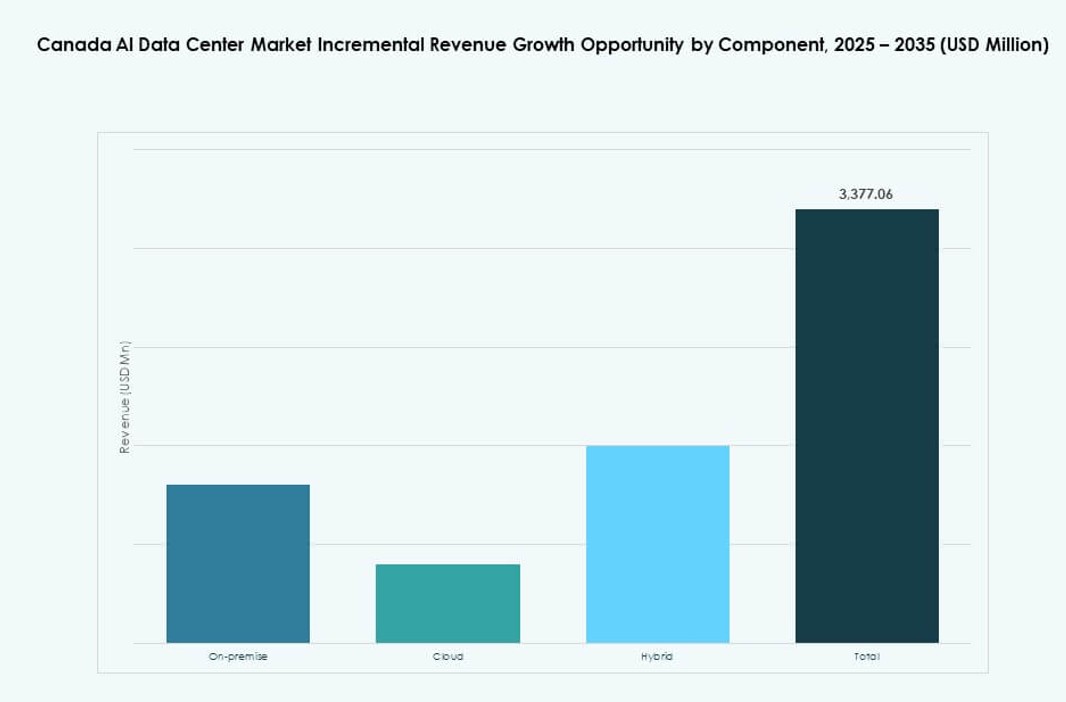

By Component

Hardware leads the market, driven by GPU servers, high-density racks, and cooling systems. Operators invest heavily in compute-intensive hardware to support AI workloads. Software and orchestration tools grow rapidly as AI users seek workload portability. Services contribute to deployment, monitoring, and lifecycle management. DCIM, AI orchestration, and hybrid management platforms enable agile scaling. Together, these components form a tightly integrated AI infrastructure layer.

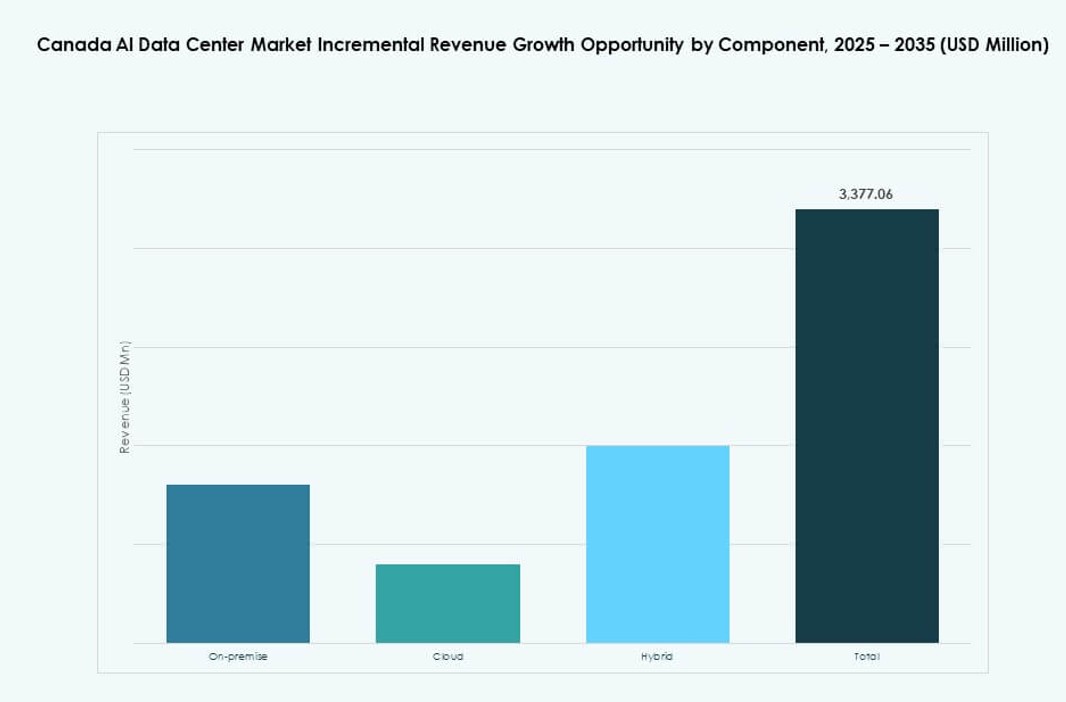

By Deployment

Cloud remains dominant due to its flexibility, scalability, and access to GPU resources. Hyperscalers expand AI offerings in public cloud zones. Hybrid deployments are rising as enterprises retain sensitive workloads on-prem. Hybrid models support compliance, cost control, and AI model customization. On-premise deployment still holds relevance in regulated sectors. Each model caters to different AI maturity levels and use cases.

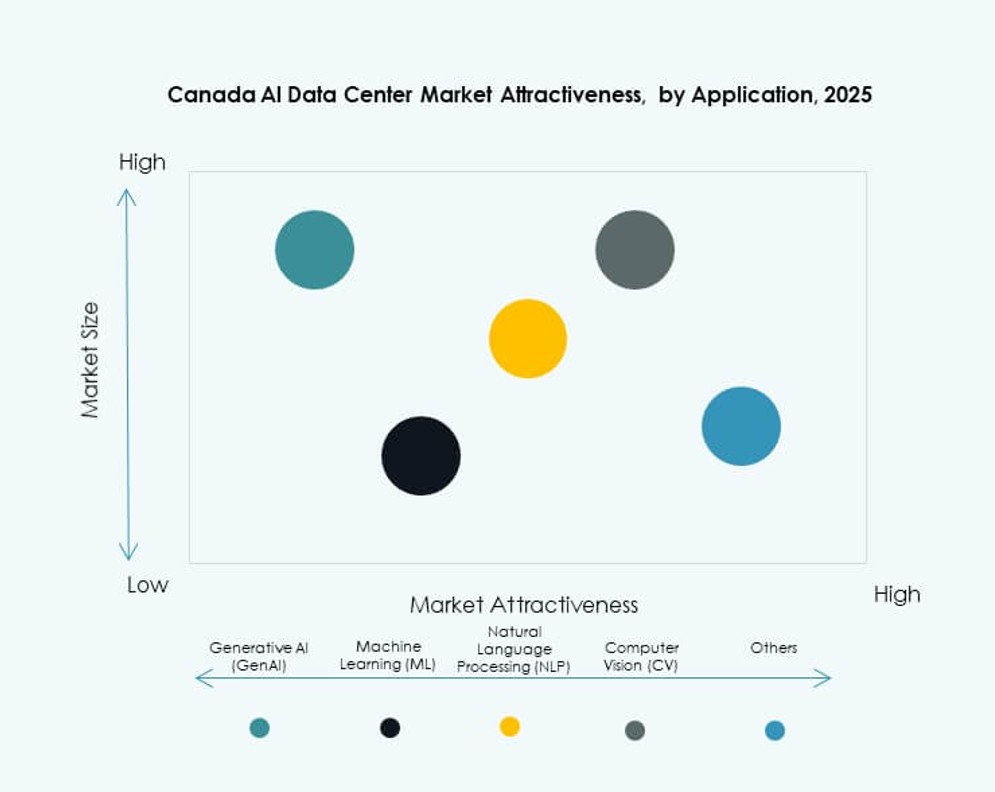

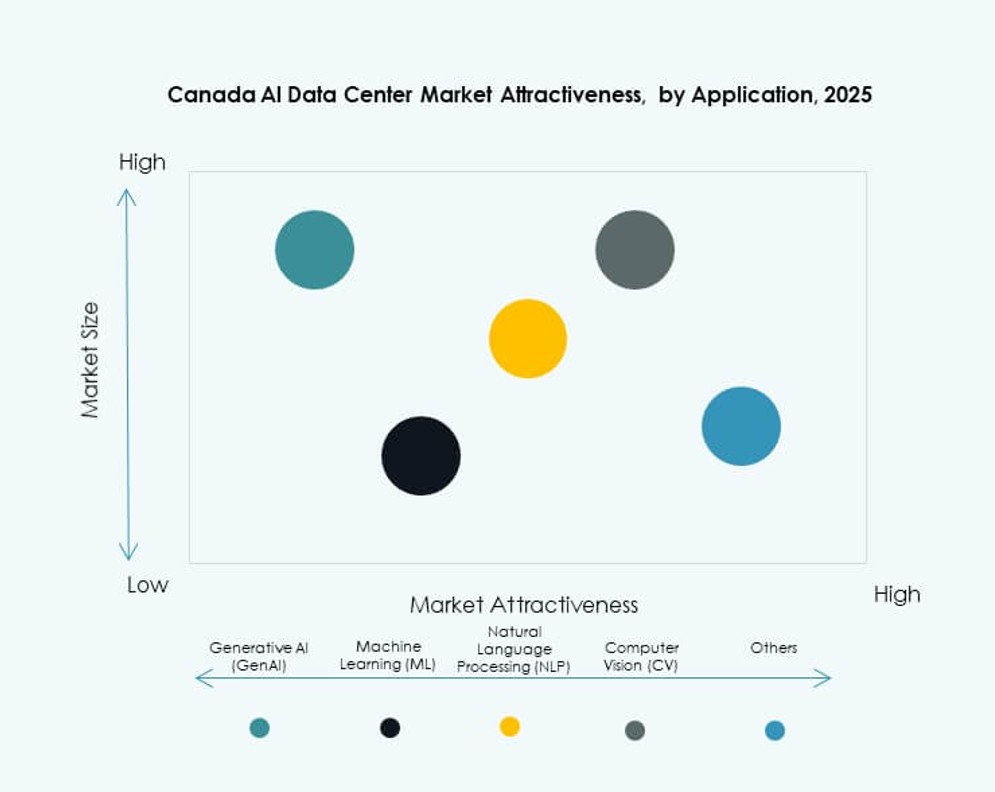

By Application

Machine learning holds the largest share, driven by predictive analytics and data modeling. Generative AI sees rapid growth with enterprise adoption of LLMs. Computer vision and NLP are rising across healthcare, automotive, and smart city deployments. Other applications include recommendation engines and fraud detection systems. The Canada AI Data Center Market supports all workloads with GPU-tuned infrastructure. AI stack diversity drives rack and cooling customization.

By Vertical

IT and telecom dominate, driven by infrastructure needs for AI service delivery. BFSI and retail follow closely, adopting AI for fraud detection and personalization. Healthcare uses AI for diagnostics and medical imaging. Media & entertainment demand AI for content generation and streaming optimization. Manufacturing and automotive sectors use AI for automation and predictive maintenance. Vertical diversity supports sustained growth across use cases.

Regional Insights

Ontario Leading National Capacity with Toronto as the Primary AI Infrastructure Hub

Ontario holds over 42% of the Canada AI Data Center Market. Toronto leads due to its tech talent, connectivity, and data regulations. Hyperscalers and financial institutions choose Toronto for proximity to users and AI research centers. Government support and clean power access enable dense GPU infrastructure. The region continues to attract cloud and colocation investments. Ontario remains the core market for AI-driven deployments.

- For instance, the Canadian government announced a CAD 240 million investment in December 2024 to support Cohere’s data center expansion in Toronto, with operations starting in 2025 to boost AI compute capacity.

Quebec Gaining Traction with Renewable Power and Competitive Electricity Pricing

Quebec accounts for nearly 28% of the market, offering abundant hydroelectric energy. Its low power rates support AI workloads requiring massive power input. Montreal has become a preferred zone for hyperscale AI training clusters. Provincial programs encourage sustainable infrastructure aligned with global ESG goals. Colocation providers expand AI-ready facilities near existing grid assets. This combination positions Quebec as a green AI infrastructure hub.

Western and Atlantic Provinces Emerging with Edge Deployments and Sector-Specific Use Cases

Alberta and British Columbia are growing regions with edge AI centers near energy, healthcare, and retail hubs. Combined, western provinces hold around 20% market share. Localized data processing for robotics, diagnostics, and logistics drives adoption. British Columbia’s clean energy policies align with AI infrastructure needs. The Atlantic region is emerging through public-private research partnerships and rural connectivity projects. This activity supports distributed AI infrastructure growth.

- For instance, AWS committed USD 17.9 billion to expand its Canadian cloud infrastructure, with ongoing investment focused on enhancing AI and compute capabilities through its Canada (Central) region based in Montreal. The initiative supports national digital infrastructure and workload demand from Western provinces.

Competitive Insights:

- eStruxture Data Centers

- QScale

- Cologix

- Microsoft (Azure)

- Amazon Web Services (AWS)

- Google Cloud

- Meta Platforms

- NVIDIA

- Equinix

- Digital Realty Trust

The Canada AI Data Center Market is competitive, with strong participation from global cloud providers and domestic operators. Hyperscalers such as AWS, Microsoft, and Google drive major investments in GPU-ready infrastructure to support AI workloads. Local firms like QScale and eStruxture focus on regional coverage, sustainability, and low-latency delivery. Meta and NVIDIA contribute to demand through AI cluster deployment and hardware supply. Colocation players like Equinix and Digital Realty expand capacity for AI clients seeking hybrid models. Hardware vendors such as Dell, HPE, and Lenovo enable rapid infrastructure scaling. Competition is shaped by specialization, regional availability, and support for AI training and inference. Operators compete on energy efficiency, rack density, cooling performance, and compliance with data regulations.

Recent Developments:

- In December 2025, Microsoft announced a landmark CAD 19 billion investment (including over CAD 7.5 billion in the next two years) to expand its Azure Canada Central and Canada East datacentre regions for AI infrastructure, with new capacity coming online in late 2026.

- In July 2025, eStruxture Data Centers secured CAD 1.35 billion in financing, including Canada’s first rated asset-only data center securitization, to accelerate AI-ready data center developments across the country.

- In October 2024, eStruxture Data Centers confirmed plans for a CAD 750 million (USD 585 million) 90 MW data center near Calgary, designed to support generative AI and cloud workloads, marking a significant expansion in Alberta’s AI infrastructure.